Abstract

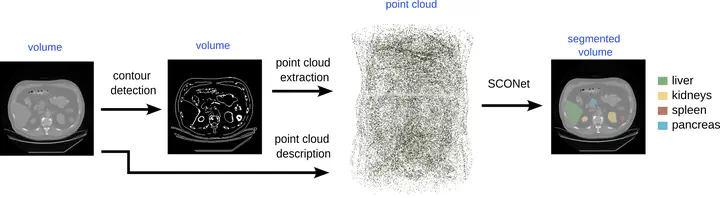

Convolutional neural networks are the de facto standard for 3D multi-organ segmentation but still exhibit significant limitations, especially regarding their computational cost: running times are high and the memory footprint is often prohibitive for large 3D volumes. To overcome these limitations, we propose to replace the image voxel grid with a more compact point cloud representation. Recently, in the field of 3D object reconstruction, networks that learn implicit functions from an input point cloud, such as Convolutional Occupancy Networks (ConvONet), have demonstrated strong surface representation capabilities. We therefore propose SCONet (Segmentation Convolutional Occupancy Network), a lightweight ConvONet-based network adapted to the specific task of multi-organ segmentation. SCONet takes as input a point cloud extracted from the original volume with a standard contour detection algorithm and enriches it with geometric and photometric features. Because it can be queried for per-organ occupancy probabilities at any point in space, SCONet can predict a multi-organ segmentation map at arbitrary resolution. We evaluate our method on an abdominal CT image dataset and compare its performance with that of discrete and implicit baselines.
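To illustrate what querying occupancy "at arbitrary resolution" can look like in practice, the following is a minimal sketch and not the SCONet implementation. It assumes a function that maps 3D points in the unit cube to per-organ occupancy probabilities; the names `toy_occupancy` and `segment`, the two-sphere "organs", and the 0.5 threshold are all hypothetical choices made for this example.

```python
import numpy as np

def toy_occupancy(points):
    """Hypothetical stand-in for a trained network: maps (N, 3) points in
    [0, 1]^3 to (N, 2) per-organ occupancy probabilities (two spheres)."""
    centers = np.array([[0.3, 0.5, 0.5], [0.7, 0.5, 0.5]])
    radii = np.array([0.15, 0.10])
    dist = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=-1)
    # Smooth inside/outside indicator: close to 1 inside a sphere, 0 outside.
    return 1.0 / (1.0 + np.exp((dist - radii) / 0.01))

def segment(occupancy_fn, resolution, threshold=0.5):
    """Query occupancy at the voxel centers of a resolution^3 grid and return
    a label map: 0 = background, k = organ k (1-based)."""
    axis = (np.arange(resolution) + 0.5) / resolution
    grid = np.stack(np.meshgrid(axis, axis, axis, indexing="ij"), axis=-1)
    probs = occupancy_fn(grid.reshape(-1, 3))        # shape (N, K)
    labels = probs.argmax(axis=1) + 1                # most probable organ
    labels[probs.max(axis=1) < threshold] = 0        # assumed background rule
    return labels.reshape(resolution, resolution, resolution)

# The same continuous function yields label maps at different resolutions.
coarse = segment(toy_occupancy, 16)
fine = segment(toy_occupancy, 64)
```

Because the occupancy function is continuous, the output resolution is chosen at query time and is independent of the resolution of the input data. The argmax-plus-threshold rule used here to merge per-organ probabilities into a single label map is one simple option and an assumption of this sketch.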