Abstract

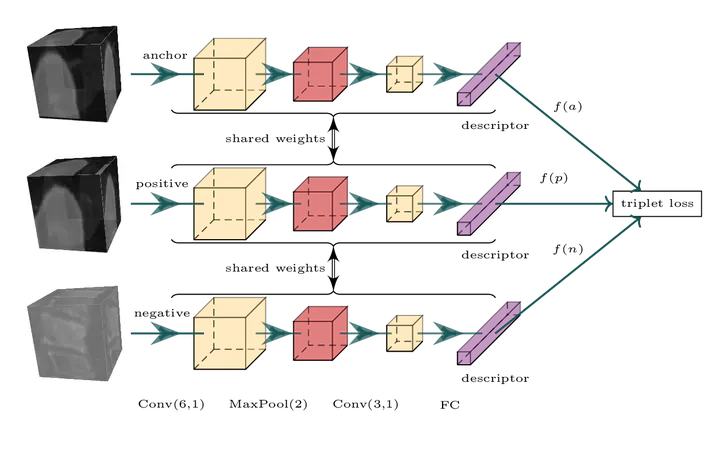

Computational anatomy focuses on the analysis of human anatomical variability. Typical applications are the discovery of differences between healthy and sick subjects and the classification of anomalies. A fundamental tool in computational anatomy, which forms the central focus of this paper, is the computation of point correspondences across volumes (3D images), such as Computed Tomography (CT) volumes, for multiple subjects. More specifically, we consider automatically detected keypoints and their local descriptors, computed from the image or volume patch surrounding each keypoint. These descriptors are essential to matching and must be both discriminative and repeatable [5,10]. Learned descriptors based on Convolutional Neural Networks (CNNs) have recently shown great success for 2D images [4]. However, while classical 2D image descriptors have been extended to volumes [1], recent learning-based approaches have been limited to 2D detection and description. An extension to 3D descriptors has only been proposed in [6], in the context of image retrieval. We propose a methodology to construct such learned volume keypoint descriptors. The main difficulty is to define a sound training approach, combining a training dataset and a loss function. In short, we propose to generate semi-synthetic data by transforming real volumes and to use a triplet loss inspired by 2D descriptor learning. Our experimental results show that our learned descriptor outperforms the hand-crafted descriptor 3D-SURF [1], a 3D extension of SURF, at a similar runtime.